In my experience, commercial software testing consists mostly of manual QA (grunt labor). Little is automated. I personally feel that even a slight investment by developers in unit tests would pay off later, especially in the crunch time before a big release, when fixing one bug may cause others.
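To make that concrete, here is a minimal sketch in Python of the kind of small investment I have in mind. The function `parse_version` and its tests are hypothetical, made up for illustration and not taken from any project mentioned here. The point is only that a few lines of test can flag a last-minute "fix" that quietly breaks something else.

```python
import unittest


def parse_version(text):
    """Turn a version string such as "1.10.2" into a tuple of ints."""
    return tuple(int(part) for part in text.strip().split("."))


class ParseVersionTest(unittest.TestCase):
    def test_simple_version(self):
        self.assertEqual(parse_version("1.2.3"), (1, 2, 3))

    def test_numeric_not_lexical_ordering(self):
        # Guards against a later "fix" that compares versions as strings,
        # where "1.10" would wrongly sort before "1.9".
        self.assertGreater(parse_version("1.10"), parse_version("1.9"))

    def test_surrounding_whitespace(self):
        self.assertEqual(parse_version(" 2.0\n"), (2, 0))
```

Saved as a file, this can be run with `python -m unittest <filename>` before every release, which costs seconds compared with a manual QA pass.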

I guess it is hard to justify the ROI of testing, so there is little emphasis on it. Now let's leave the cathedral and head to the bazaar... which leads me to another chart.

I recently patched and helped port two open source projects, and when I asked the main developers if they had unit tests, they said they only did manual testing. This is pretty widespread among smaller projects and, I would venture to say, among many desktop apps.

Yet as projects grow bigger, they tend to acquire some sort of unit or regression tests, and testing improves... or does it?

Within the past two days I read two articles that highlight the range of testing done on open source software. One was a Slashdot article asking whether there were any open source tools available for load testing and QA. It wasn't even given space on the Slashdot front page (which shows how important testing is to the Slashdot editors...), and it received very few responses. It seems that most people either buy an expensive solution or roll their own (much money spent, or much reinventing of the wheel).

The second discussed Coverity's static analysis of FreeBSD, which reported 306 potential bugs. We've all heard the argument that "many eyes make bugs shallow". There is some truth to that, and you see it in the bigger, more widespread projects. As people use a project in their own way, they find bugs and (hopefully) either report them or send patches. But this is usually an uncoordinated effort.

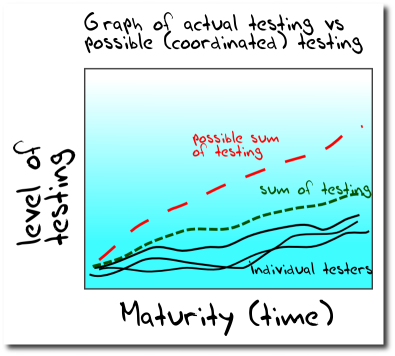

There is little aggregation of tests (other than unit tests). Also, eyes aren't good at catching the kinds of problems that a good load test exposes. In the chart, I'll call the green line the sum of all the testing that goes into a project. When performance, stress, or load tests are done, are the results being returned to the project and aggregated there? How much of this work is repeated by end users who run the same types of tests? How much is implemented in house (not by the project)? How much time and energy could be saved (or put into new testing) if this information were aggregated and shared (see the red line in the chart)?

Maybe testing just doesn't itch very much, or we don't like to scratch it... Does anyone have examples of projects that are particularly good at testing?